To completely validate the product, we need to run our test suite on the following architectures:

Solaris (Intel)

Solaris (Sparc)

Windows NT (Intel)

Windows NT (Alpha)

Windows 95

Since our product communicates between two machines, we need to confirm that it interoperates between any combination of the above architectures.

So we have the following combinations:

| | Solaris Intel | Solaris Sparc | Windows NT Intel | Windows NT Alpha | Windows 95 |
| --- | --- | --- | --- | --- | --- |
| Solaris Intel | X | X | X | X | X |
| Solaris Sparc | X | X | X | X | X |
| Windows NT Intel | X | X | X | X | X |
| Windows NT Alpha | X | X | X | X | X |
| Windows 95 | X | X | X | X | X |

To further complicate our lives, we assume XFtp is written in Java, so we need to test it on both Java 2 and Java 1.1.8. This nicely doubles our test combinations from 25 to 50.
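The count above can be checked by enumerating the matrix directly. The following is only an illustrative sketch: the class name `TestMatrix` is made up, and it assumes both machines in a pair run the same Java version, which is the reading that gives 50.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists every (from, to, Java version) test combination.
class TestMatrix {
    static final String[] PLATFORMS = {
        "Solaris Intel", "Solaris Sparc",
        "Windows NT Intel", "Windows NT Alpha", "Windows 95"
    };

    // Assumption: both ends of a connection run the same Java version.
    static final String[] JAVA_VERSIONS = { "Java 2", "Java 1.1.8" };

    static List<String> combinations() {
        List<String> result = new ArrayList<>();
        for (String from : PLATFORMS) {
            for (String to : PLATFORMS) {
                for (String jvm : JAVA_VERSIONS) {
                    result.add(from + " -> " + to + " on " + jvm);
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // 5 platforms x 5 platforms x 2 Java versions
        System.out.println(combinations().size() + " combinations"); // prints "50 combinations"
    }
}
```

Adding one more variable with n possible values multiplies the list length by n, which is why the matrix grows so quickly.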

It soon becomes clear that as new variables are added, the number of test combinations spirals rapidly out of control. It gets MUCH worse when you are testing real distributed software running on multiple machines. We currently run our tests on 16 different machines! (If we limit ourselves to Solaris Intel and Solaris Sparc, this still gives 20 922 789 888 000 combinations!) Clearly, some automation of this process would be a good idea.
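The text does not say how the figure of 20 922 789 888 000 was derived, but it equals 16!, the number of ways to order 16 distinct machines. Assuming that is the intended calculation, a short sketch (the class name `MachineOrderings` is hypothetical) reproduces it:

```java
import java.math.BigInteger;

// Hypothetical helper: counts the orderings of n distinct machines (n factorial).
class MachineOrderings {
    static BigInteger factorial(int n) {
        BigInteger result = BigInteger.ONE;
        for (int i = 2; i <= n; i++) {
            result = result.multiply(BigInteger.valueOf(i));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(factorial(16)); // prints 20922789888000
    }
}
```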

This is still only half the problem. Each machine has to be installed with the software to be tested. With the engineering department sometimes delivering new versions two or three times a week, this also gets to be a very time-consuming process.

Step one is to write each test case in a platform-independent way. To do this, we developed a simple scripting language, very similar to C-shell, although it could be implemented in any language you want. All references to platform-specific items are deferred by referring to one or more configuration files. A configuration is then built up for each test based on the architecture and operating system of the target machine.

So instead of directly executing a command within the test, we refer to variables which will only be defined at the time of running the test. For example, to start a Java Virtual Machine, instead of using :

execute("c:\jdk1.2\bin\java.exe TestCaseClass")

we would use :

JAVA_CMD=JAVA_CMD_AGENTNAME

execute(JAVA_CMD+" TestCaseClass")

In this way, we don't care at the test level what type of machine the test will run on. Somewhere higher up in the configuration hierarchy we might have a file which defines these values as :

IF (AGENT1_OS=="WindowsNT")

JAVA_CMD_AGENTNAME="c:\jdk1.2\bin\java.exe"

ELSE

JAVA_CMD_AGENTNAME="/usr/bin/java"
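The same deferred lookup can be sketched in Java. This is an illustration only, not how the harness itself is implemented: the class `AgentConfig` and its methods are hypothetical, and only the OS name `WindowsNT` and the two paths come from the example above.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: the configuration is built per agent, the test only names variables.
class AgentConfig {
    // Builds the variable table for one agent from its operating system name.
    static Map<String, String> forOs(String agentOs) {
        Map<String, String> vars = new HashMap<>();
        if ("WindowsNT".equals(agentOs)) {
            vars.put("JAVA_CMD", "c:\\jdk1.2\\bin\\java.exe");
        } else {
            vars.put("JAVA_CMD", "/usr/bin/java");
        }
        return vars;
    }

    // The test refers to JAVA_CMD and never to a concrete path.
    static String javaCommandLine(Map<String, String> vars, String testClass) {
        return vars.get("JAVA_CMD") + " " + testClass;
    }

    public static void main(String[] args) {
        System.out.println(javaCommandLine(forOs("WindowsNT"), "TestCaseClass"));
        System.out.println(javaCommandLine(forOs("Solaris"), "TestCaseClass"));
    }
}
```

Supporting a new platform then means adding a branch to the configuration, while the test cases stay untouched.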

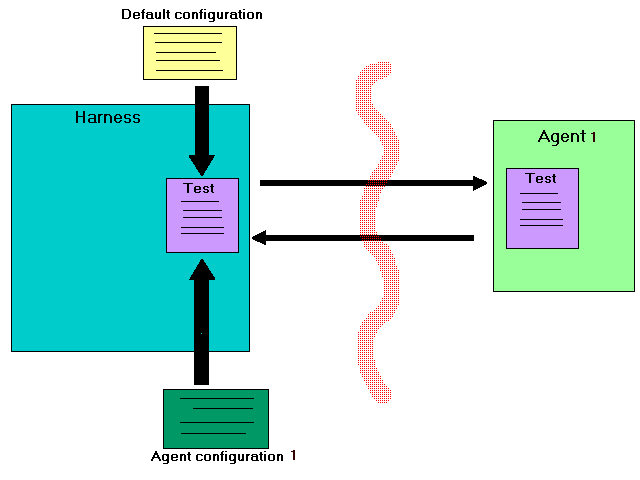

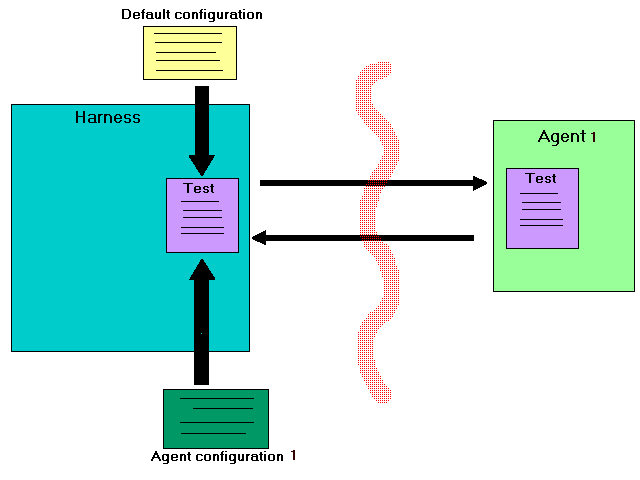

The second, and possibly more important, step is to transfer the test to whatever machine we want to run it on (the agent).

This was achieved by developing a relatively stupid agent that can respond to a limited set of requests from the harness via TCP sockets. Some examples are GETFILE, SENDFILE, DELFILE and EXECUTE. The test script running on the harness may then use this command set to build up a desired environment on the agent. We are able to transfer files, unzip files, delete files, set environment variables, and do any of the other good things we need to run a test. Once the test has run, a result is decided on and sent back to the harness for processing.
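A request set like the one described above could be served by a dispatcher along the following lines. This is a minimal sketch, not the actual agent: the class name `SketchAgent`, the line-based wire format, the reply strings, the in-memory file store, and the direction of SENDFILE and GETFILE are all assumptions made for illustration, and EXECUTE is left out.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.HashMap;
import java.util.Map;

// Hypothetical agent: one request per line, "COMMAND name [content]", one reply per line.
class SketchAgent {
    // In-memory stand-in for the agent's working directory.
    private final Map<String, String> files = new HashMap<>();

    // Handles a single request line and returns the reply line.
    String handle(String request) {
        String[] parts = request.trim().split(" ", 3);
        String command = parts[0];
        String name = parts.length > 1 ? parts[1] : "";
        switch (command) {
            case "SENDFILE": // assumed: harness pushes a file to the agent
                files.put(name, parts.length > 2 ? parts[2] : "");
                return "OK";
            case "GETFILE": // assumed: harness pulls a file back from the agent
                return files.containsKey(name) ? "OK " + files.get(name) : "ERROR no such file";
            case "DELFILE":
                return files.remove(name) != null ? "OK" : "ERROR no such file";
            default:
                return "ERROR unknown command " + command;
        }
    }

    // Serves one harness connection, answering each request line in turn.
    void serve(ServerSocket server) throws IOException {
        try (Socket harness = server.accept();
             BufferedReader in = new BufferedReader(new InputStreamReader(harness.getInputStream()));
             PrintWriter out = new PrintWriter(harness.getOutputStream(), true)) {
            String line;
            while ((line = in.readLine()) != null) {
                out.println(handle(line));
            }
        }
    }
}
```

Keeping the agent this small is the point of the design: all test logic stays on the harness, and the agent only needs to understand a handful of verbs.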

Since the agent is written in Java, it runs on any architecture supporting a JVM, including restricted ones running pJava, kJava and various other slimmed-down versions.

The data flow outline is presented in Figure 1:

Enter the QAT Tool.

It is a test harness which centralises all the tests onto the platform of your choice (it's 100% Pure Java), and all you need to do is run a simple agent on your test machine, which listens on a TCP socket for commands from the harness.

Tests are written in a specially designed scripting language, but this may be redefined to suit the user's needs by overriding the parser interface.

The tests have available to them a limited but powerful set of commands which may be run on an agent machine, such as sending and receiving files, and starting, stopping and killing processes. All output of the test running on the agent is stored, and sent back to the harness if requested.
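Starting a process and keeping its output for later retrieval can be sketched with the standard `ProcessBuilder` API. This is an assumption about one possible approach, not the agent's actual code: the class `ProcessRunner` and its `Result` type are hypothetical, and `readAllBytes` requires Java 9 or later, which is newer than the Java versions discussed in this article.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: runs a command and stores everything it prints.
class ProcessRunner {
    // Exit code plus the combined stdout and stderr of one run.
    static final class Result {
        final int exitCode;
        final String output;

        Result(int exitCode, String output) {
            this.exitCode = exitCode;
            this.output = output;
        }
    }

    static Result run(List<String> command) {
        try {
            Process process = new ProcessBuilder(command)
                    .redirectErrorStream(true) // merge stderr into stdout
                    .start();
            String output;
            try (InputStream in = process.getInputStream()) {
                // Reads until the process closes its output stream.
                output = new String(in.readAllBytes(), StandardCharsets.UTF_8);
            }
            return new Result(process.waitFor(), output);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for process", e);
        }
    }

    public static void main(String[] args) {
        // Uses the JVM that is running this sketch as the command to start.
        String javaBin = Paths.get(System.getProperty("java.home"), "bin", "java").toString();
        Result result = run(Arrays.asList(javaBin, "-version"));
        System.out.println("exit code: " + result.exitCode);
        System.out.print(result.output);
    }
}
```

A real agent would also need a way to stop or kill a long-running process, for example by keeping the `Process` object and calling `destroy()` on it.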

So all tests, associated files, and other bits and pieces used to run the suite are stored locally on a single machine, and are sent to the agent machine as required.

All that is required to run ANY test suite on ANY machine is a Java Virtual Machine running the QAT Agent. It is also possible to get the agent to update itself when new software is available, meaning that once a machine is running the agent, it need never be reconfigured.